Research

Katori Laboratory is developing new information processing mechanisms and artificial intelligence that incorporate the sophisticated and flexible information processing of living brains. Using mathematical sciences and computers as tools, we study new information processing mechanisms that exploit the neural dynamics and nonlinear dynamics of the brain.

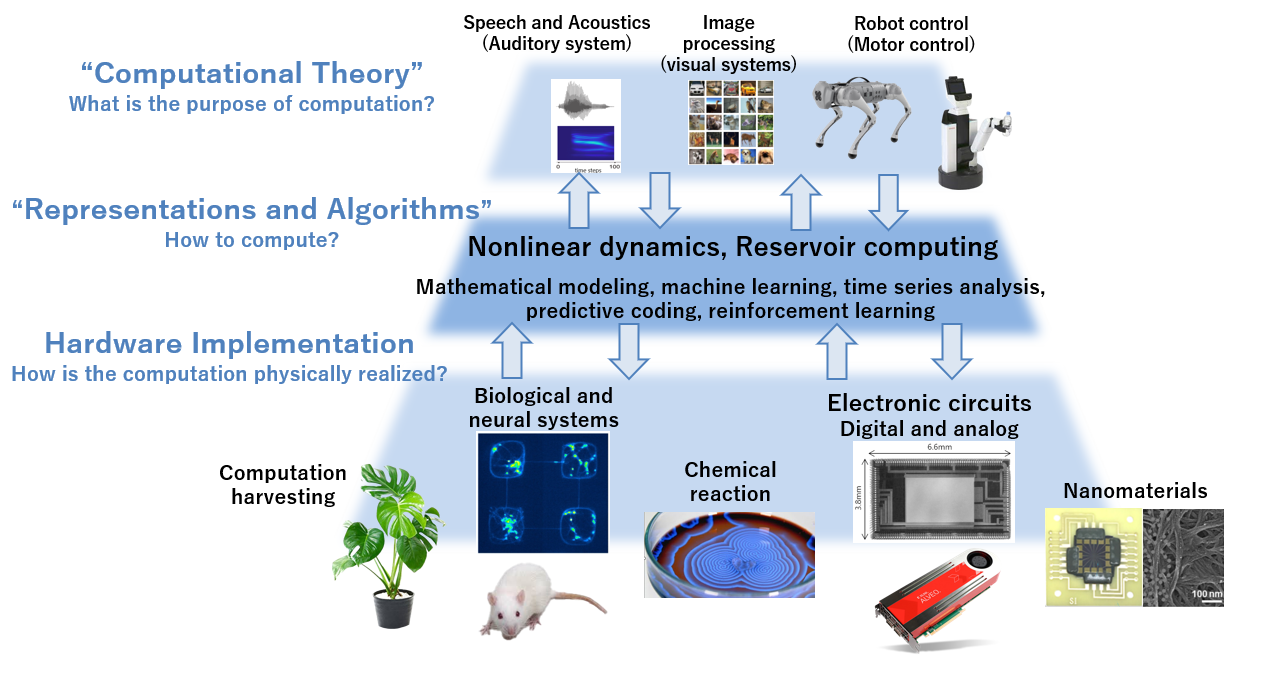

We organize the overall picture of our research into three levels (Marr 1982): “computational theory,” “representation and algorithms,” and “hardware implementation” (see the figure below). The “computational theory” level concerns the purpose of computation, the “representation and algorithms” level concerns its procedure, and the “hardware implementation” level concerns its physical realization. Although each level can be studied on its own, integrating all three lets us understand and study information processing in depth.

The figure below maps our laboratory’s research onto these three levels. At the “computational theory” level, we focus on functions such as audio and image processing and robot control. At the “representation and algorithms” level, we use various mathematical methods centered on nonlinear dynamics. At the “hardware implementation” level, the targets range from biological neural systems to electronic circuits, along with the other subjects shown in the figure.

Nonlinear dynamics is an essential means of representation: it serves as a common language for describing a wide range of dynamical objects. By modeling a target system mathematically within the framework of nonlinear dynamics and analyzing the model, we can understand the system deeply and feed that knowledge back to the target. Furthermore, by clarifying the conditions required to achieve the purpose of computation (a function or application), we can design computational mechanisms better suited to that purpose. In this way, “representation and algorithms,” centered on nonlinear dynamics, bridges “computational theory” and “hardware implementation.” At Katori Laboratory, we advance this research in collaboration with experts in the fields related to “computational theory” and “hardware implementation.”

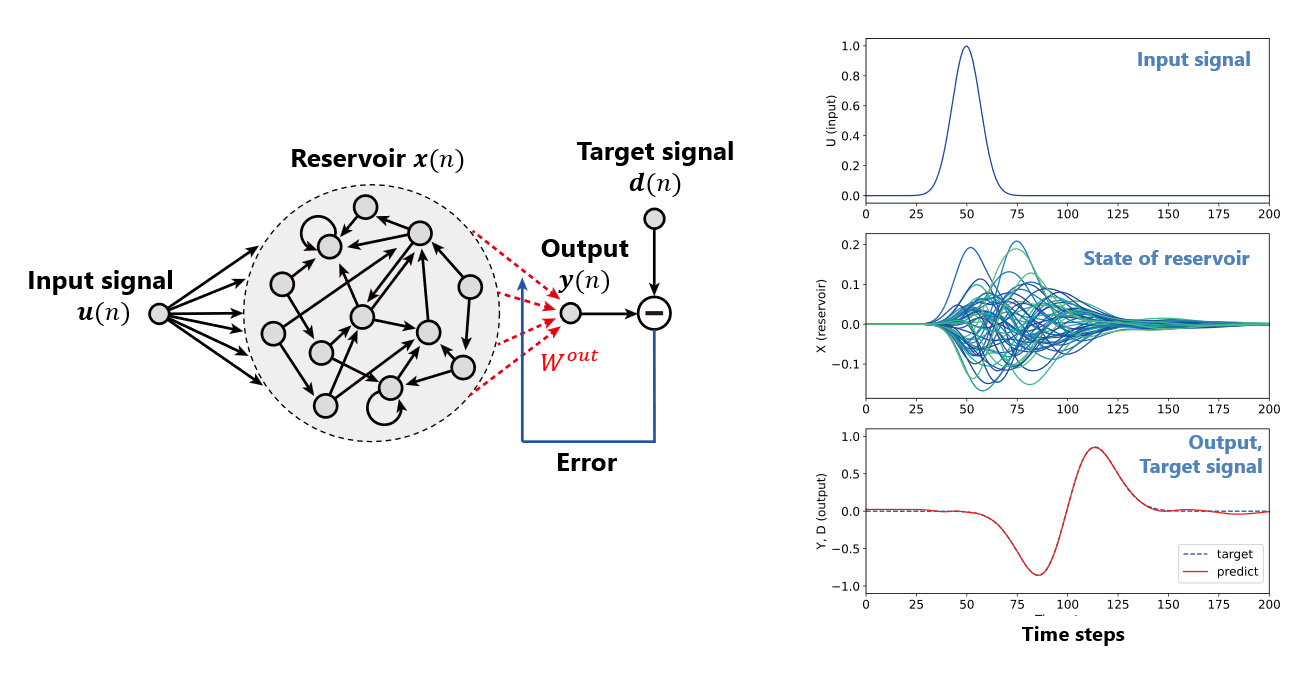

What is reservoir computing?

Reservoir computing is a central research topic at Katori Laboratory. This computational method exploits transient oscillatory phenomena in a network of interconnected nonlinear elements, called a reservoir. When the network receives an external input signal, it produces characteristic oscillatory patterns, and a readout that decodes these patterns generates the time-series output the task requires. Because only the readout is trained while the reservoir itself stays fixed, both computation and learning are inexpensive, which is a key feature of the method. It is also versatile, with applications ranging from time-series generation and forecasting to pattern recognition and robot control. A particularly attractive aspect is that it can be realized on many physical systems: the dynamics of electronic circuits, fluids, biological systems, and materials can all serve as reservoirs.

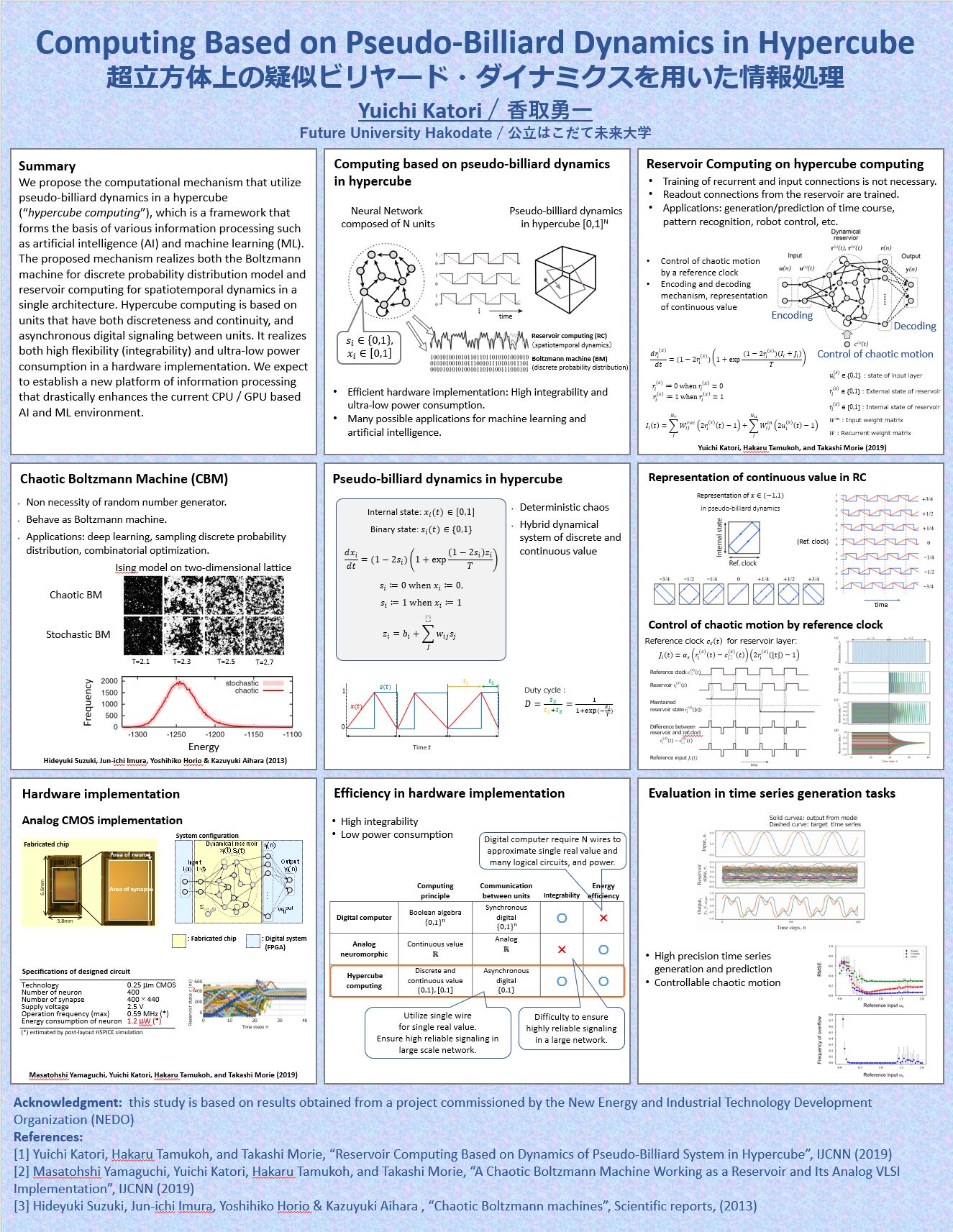

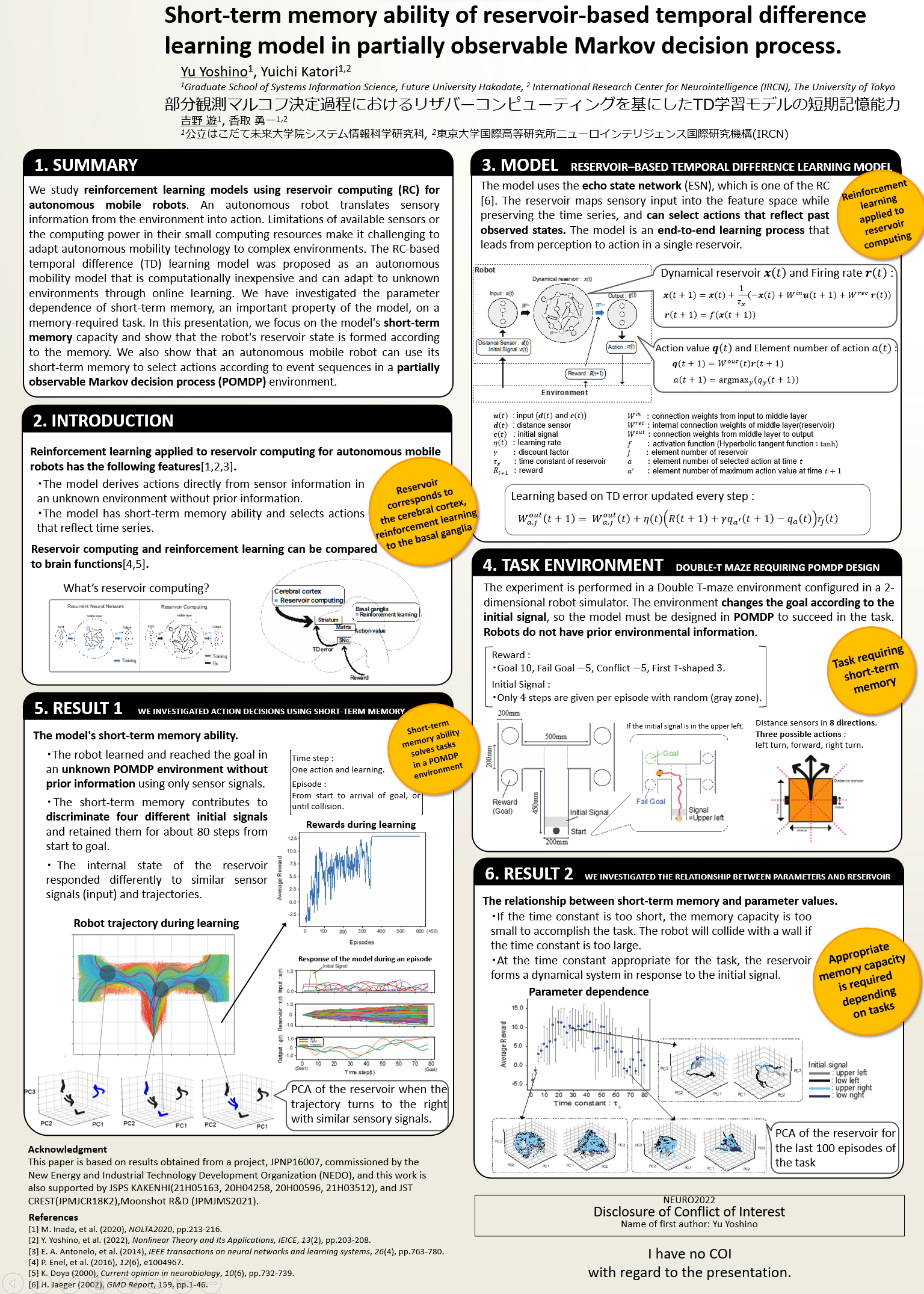
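The idea of a fixed reservoir with a trained readout can be illustrated with a small echo state network, one common software form of reservoir computing. The following is a minimal sketch under stated assumptions, not the laboratory's implementation. A fixed random network of tanh units plays the role of the reservoir, and only a linear readout is fitted by ridge regression. All function names (`make_reservoir`, `run_reservoir`, `train_readout`, `delayed_recall_nmse`), the parameter values (40 units, input scale 0.2, ridge 1e-6), and the task (recalling the input from `delay` steps earlier, a simple short-term memory benchmark) are hypothetical choices made for illustration.

```python
import math
import random

def make_reservoir(n, seed=0, w_norm=0.9, in_scale=0.2):
    """Random recurrent weights W and input weights w_in (hypothetical values)."""
    rng = random.Random(seed)
    W = [[rng.uniform(-1, 1) for _ in range(n)] for _ in range(n)]
    # Rescale so the largest absolute row sum equals w_norm < 1. Since tanh is
    # 1-Lipschitz, this conservatively guarantees fading memory of old inputs.
    row_max = max(sum(abs(w) for w in row) for row in W)
    W = [[w * w_norm / row_max for w in row] for row in W]
    w_in = [rng.uniform(-in_scale, in_scale) for _ in range(n)]
    return W, w_in

def run_reservoir(W, w_in, inputs):
    """Drive the fixed reservoir with a scalar input sequence; return all states."""
    n = len(w_in)
    x = [0.0] * n
    states = []
    for u in inputs:
        x = [math.tanh(sum(W[i][j] * x[j] for j in range(n)) + w_in[i] * u)
             for i in range(n)]
        states.append(x + [1.0])  # constant bias feature for the readout
    return states

def solve(A, y):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(y)
    M = [row[:] + [y[i]] for i, row in enumerate(A)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        w[r] = (M[r][n] - sum(M[r][k] * w[k] for k in range(r + 1, n))) / M[r][r]
    return w

def train_readout(states, targets, ridge=1e-6):
    """Only the readout is learned: solve (X^T X + ridge*I) w = X^T y."""
    d = len(states[0])
    A = [[sum(s[i] * s[j] for s in states) + (ridge if i == j else 0.0)
          for j in range(d)] for i in range(d)]
    b = [sum(s[i] * t for s, t in zip(states, targets)) for i in range(d)]
    return solve(A, b)

def delayed_recall_nmse(n=40, delay=1, washout=50, n_train=400, n_test=200, seed=0):
    """Normalized test error when the readout must output u(t - delay)."""
    rng = random.Random(seed + 1)
    total = washout + n_train + n_test
    u = [rng.uniform(-1, 1) for _ in range(total)]
    W, w_in = make_reservoir(n, seed)
    X = run_reservoir(W, w_in, u)
    train = range(washout, washout + n_train)  # washout >= delay, so indices stay valid
    test = range(washout + n_train, total)
    w = train_readout([X[t] for t in train], [u[t - delay] for t in train])
    pred = [sum(a * c for a, c in zip(w, X[t])) for t in test]
    true = [u[t - delay] for t in test]
    mean = sum(true) / len(true)
    mse = sum((p - q) ** 2 for p, q in zip(pred, true)) / len(true)
    var = sum((q - mean) ** 2 for q in true) / len(true)
    return mse / var
```

With `delay=1` the error should be small, while a long delay such as `delay=10` should give a much larger error, because this strongly contracted reservoir quickly forgets older inputs. Scaling by the maximum absolute row sum, rather than by the spectral radius, is a design choice that avoids an eigenvalue computation in pure Python at the cost of a shorter memory.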

Information Processing Based on Pseudo-Billiard Dynamics on a Hypercube

We propose a novel approach that realizes reservoir computing with the complex reflective motion (pseudo-billiard dynamics) of nonlinear elements inside a hypercube. The mechanism is built from units (neurons) that combine discrete and continuous features, forming a hybrid information processing architecture that merges the strengths of digital and analog computation and allows efficient hardware implementation. In an analog-circuit implementation, each neuron’s internal state is represented locally as an analog physical quantity (a voltage), while signals between neurons are transmitted as asynchronous digital signals, which substantially reduces the required circuit resources and power consumption. This also makes the mechanism well suited to applications such as edge computing. A single architecture can realize both the discrete probability distribution model of Boltzmann machines and the spatiotemporal dynamics model of reservoir computing, enabling a wide range of applications. These results are attracting attention as an application of nonlinear dynamics and are expected to serve as a foundational technology for brain-like artificial intelligence.

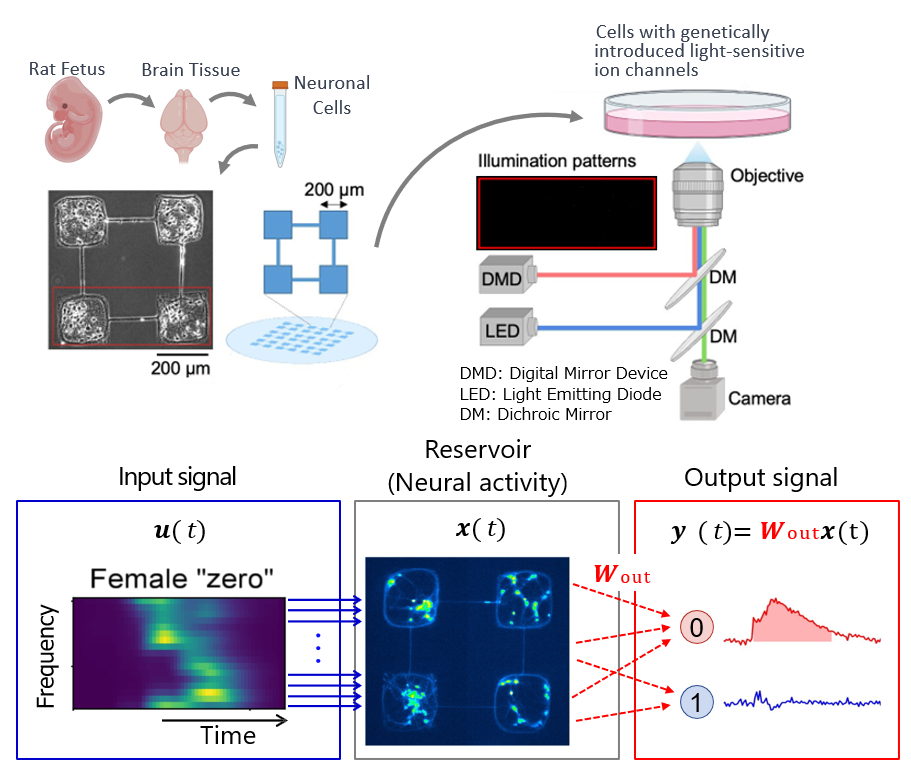
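The page does not state the equations of the model, so the following is only a minimal sketch under an explicit assumption: we borrow the update rule of the chaotic Boltzmann machine (Suzuki et al., 2013), an existing hybrid model with the same ingredients, and the laboratory's exact formulation may differ. Each unit i has an analog state x_i in [0, 1] and a binary state s_i. With z_i = Σ_j w_ij s_j + b_i, x_i rises at speed 1 + exp(z_i) while s_i = 0 and falls at speed 1 + exp(-z_i) while s_i = 1, and s_i flips whenever x_i hits a face of the hypercube. The point (x_1, ..., x_n) therefore moves in straight lines and reflects at the faces, which is the pseudo-billiard picture. The names `billiard_step` and `time_fraction_on` and the parameters `W` and `b` are hypothetical.

```python
import math

def billiard_step(x, s, W, b):
    """Advance all units to the next wall hit and flip that unit's binary state.

    x: analog states in [0, 1] (one hypercube coordinate per unit)
    s: binary states, 0 or 1; W, b: hypothetical weights and biases.
    Mutates x and s in place; returns (elapsed time, index of flipped unit).
    """
    n = len(x)
    # z_i depends only on the binary states, so it is constant between events.
    z = [sum(W[i][j] * s[j] for j in range(n)) + b[i] for i in range(n)]
    # Assumed chaotic-Boltzmann-machine velocity: positive while s_i = 0,
    # negative while s_i = 1.
    v = [(1 - 2 * s[i]) * (1 + math.exp((1 - 2 * s[i]) * z[i])) for i in range(n)]
    # Time for each unit to reach the face it is heading toward.
    t_hit = [(1.0 - x[i]) / v[i] if s[i] == 0 else x[i] / -v[i] for i in range(n)]
    k = min(range(n), key=lambda i: t_hit[i])
    dt = t_hit[k]
    for i in range(n):
        x[i] = min(1.0, max(0.0, x[i] + v[i] * dt))  # clamp rounding error
    # Reflection: unit k sits exactly on the wall and its binary state flips,
    # which corresponds to one asynchronous one-bit event.
    x[k] = 1.0 if s[k] == 0 else 0.0
    s[k] = 1 - s[k]
    return dt, k

def time_fraction_on(W, b, n_events=20000):
    """Fraction of time each unit spends with s_i = 1 (start: x = 0.5, s = 0)."""
    n = len(b)
    x, s = [0.5] * n, [0] * n
    on_time, total = [0.0] * n, 0.0
    for _ in range(n_events):
        s_before = s[:]  # binary states held during this interval
        dt, _ = billiard_step(x, s, W, b)
        for i in range(n):
            on_time[i] += dt * s_before[i]
        total += dt
    return [t / total for t in on_time]
```

Because every velocity is constant between flips, the simulation jumps exactly from one wall hit to the next instead of using a fixed time step. For an isolated unit (`W = [[0.0]]`), the s_i = 1 and s_i = 0 phases last 1/(1 + e^(-z)) and 1/(1 + e^z), so `time_fraction_on([[0.0]], [1.0])` returns roughly `[0.731]`, the logistic sigmoid of z = 1. This equals a Boltzmann machine's conditional probability that the unit is on, which illustrates how such hybrid units can represent a discrete probability distribution. Using the units as a reservoir would additionally require feeding an input signal into `b` and training a readout, which is not shown here.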

Implementing Reservoir Computing on Cultured Neural Networks

Artificial intelligence and machine learning have developed by emulating the functions of biological brains. However, the intricate and flexible information processing of the brain, an assembly of neurons, remains largely unexplained. In this research, we address this challenge by artificially constructing a small-scale brain from biological neurons. Using optogenetics and fluorescent calcium imaging, we recorded the multicellular responses of cultured neural networks and analyzed their computational capabilities within the framework of reservoir computing. The experiments showed that this “artificially cultured brain” has a short-term memory of a few hundred milliseconds, which can be exploited to classify time-series data, and that it can generalize. These findings not only advance our understanding of information processing in living neural networks but also open the way to new information processing mechanisms based on the “artificially cultured brain.”

Posters